U.S. to Collect Genetic Data to Hone Care

Obama on New Medical Funding Initiative

By ROBERT PEAR JAN. 30, 2015

By AP, published January 30, 2015. Photo by Jabin Botsford

http://www.nytimes.com/2015/01/31/us/obama-to-unveil-research-initiative-aiming-to-develop-tailored-medical-treatments.html

Medical Surveillance

TIs cannot get medical care. Someone interferes with the doctors, nurses and staff and influences them to be rude, argumentative and obstructive and never to give you the care you need.

Part of the TI program is "medical surveillance," which is surveillance of the Target's medical conditions, tests, office visits, literally everything. There is nothing private about a TI's medical records. They cannot get accurate testing for the poisonings and toxic substances that are put in their homes and cars. They are literally blackballed in the medical community. TIs are surveilled in their homes using Wireless Sensor Networks, which interact with implants and track the person around the home. All phone calls are hacked, so the TI cannot go to the doctor without an agent or contractor getting there first to prepare the doctor to mistreat the Target: to give them bad medical care, withhold prescriptions, give them exorbitantly priced prescriptions, or withhold test results and treatment. All medical care is corrupted.

RESEARCH 3-12-2014 WIRELESS SENSOR NETWORKS

To Targeted Individuals:

Indications of possible Wireless Sensor Network Surveillance

Are you being tortured in your home? Do your torturers vibrate and shock you when you are lying on your bed? Do your torturers know exactly where your body parts are when you get in bed? Do they work on you with various frequencies which make your arms and legs move by themselves? Are you being electronically raped when you go to bed? Do you vibrate all over? And do these things happen every day?

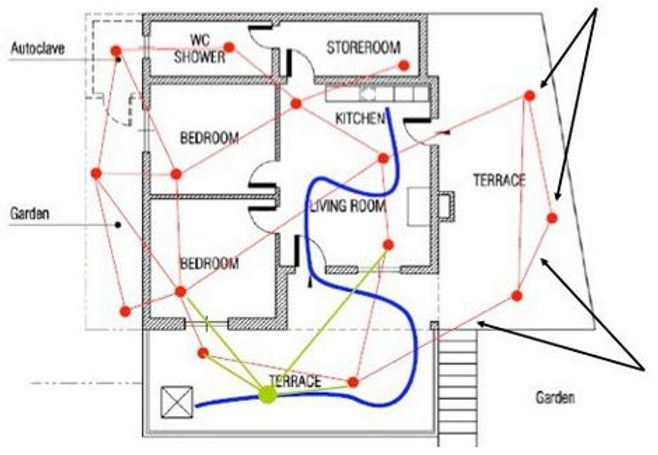

You may have a WIRELESS SENSOR NETWORK, a MIMO (Multiple Input Multiple Output) COFDM IP Mesh NetNode radio, or a WiFi system installed in your home that provides your “handlers” with a picture of you. The antenna beamforming that aims radiation indicates that this system can be programmed from somewhere outside the home. Pictures can be made using only radio frequency from systems with as many as 32 nodes. Even better pictures may be possible with actual cameras, which also form a WIRELESS CAMERA NETWORK; the pictures they take can be put together to form a hologram, a 3D picture.

“Handlers” can be anyone from the military, the Community Policing Program (police, firemen, paramedics, utility workers, corporate partners, cohorts/gangstalkers), or neighbors who have been given the electronic equipment to monitor and hurt you as part of a military type operation. Americans are being given the weapons and are being paid to torture, burn, shock, harass, surveil and gangstalk other Americans, all under the twisted guise of fighting those pesky 9-11 related domestic terrorists.

Have you stated you are going to Publix or Walmart and the gangstalkers are waiting in the parking lot for you? Your wireless sensor network provides your “handlers” with audio of everything that goes on in your home. They know when you eat, go to the bathroom, when you make love, everything. Privacy is just not for the “modern” terrorist.

What are Wireless Sensor Networks?

A wireless sensor network (WSN) consists of spatially distributed autonomous sensors that monitor physical or environmental conditions, such as temperature, sound, and pressure, and cooperatively pass their data through the network to a main location. The more modern networks are bi-directional, also enabling control of sensor activity and programming of the system from a distance. The development of wireless sensor networks was motivated by military applications such as battlefield surveillance; today such networks are used in many industrial and consumer applications, such as industrial process monitoring and control and machine health monitoring.

The WSN is built of "nodes" (from a few to several hundreds or even thousands), where each node is connected to one (or sometimes several) sensors. Each such sensor network node typically has several parts: a radio transceiver with an internal antenna or connection to an external antenna, a microcontroller, an electronic circuit for interfacing with the sensors, and an energy source, usually a battery or an embedded form of energy harvesting. A sensor node might vary in size from that of a shoebox down to the size of a grain of dust, although functioning "motes" of genuine microscopic dimensions have yet to be created. The cost of sensor nodes is similarly variable, ranging from a few to hundreds of dollars, depending on the complexity of the individual sensor nodes. Size and cost constraints on sensor nodes result in corresponding constraints on resources such as energy, memory, computational speed and communications bandwidth. The topology of the WSNs can vary from a simple star network to an advanced multi-hop wireless mesh network. The propagation technique between the hops of the network can be routing or flooding.[1][2]
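The difference between routing and flooding can be shown with a small simulation. This is a minimal sketch that assumes a hypothetical six-node mesh (the node names and links are invented for illustration). It counts how many radio transmissions each technique needs to get one packet from node "e" to the sink. With flooding, every node rebroadcasts once. With routing, only the nodes on one shortest path transmit.

```python
from collections import deque

# Hypothetical mesh: each key lists the nodes within its radio range.
MESH = {
    "sink": ["a", "b"],
    "a": ["sink", "b", "c"],
    "b": ["sink", "a", "d"],
    "c": ["a", "d", "e"],
    "d": ["b", "c", "e"],
    "e": ["c", "d"],
}

def flood(graph, source):
    """Every node that hears the packet rebroadcasts it exactly once.

    Returns (number of transmissions, set of nodes reached).
    """
    seen = {source}
    queue = deque([source])
    transmissions = 0
    while queue:
        node = queue.popleft()
        transmissions += 1                  # this node broadcasts once
        for neighbor in graph[node]:
            if neighbor not in seen:        # drop duplicate copies
                seen.add(neighbor)
                queue.append(neighbor)
    return transmissions, seen

def route(graph, source, dest):
    """Shortest multi-hop path by hop count, found with breadth-first search."""
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == dest:
            break
        for neighbor in graph[node]:
            if neighbor not in parent:
                parent[neighbor] = node
                queue.append(neighbor)
    if dest not in parent:
        return None                         # destination unreachable
    path, node = [], dest
    while node is not None:
        path.append(node)
        node = parent[node]
    return path[::-1]

transmissions, reached = flood(MESH, "e")   # 6 transmissions, all 6 nodes
path = route(MESH, "e", "sink")             # ['e', 'c', 'a', 'sink'], 3 hops
```

In this toy network, flooding needs no routing state but costs six transmissions. Routing costs three transmissions, one per hop, but each node must know where to forward the packet. This trade-off matters on battery-powered nodes.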

Wireless Sensor Networks may be part of a SMART GRID:

It only takes the installation of a small card that controls the audio/visual radio frequency controlled nodes in the home to provide surveillance of all the rooms in your house. The card can be installed in your smart meter.

New technology that has been purportedly developed for medical or defense use is being used against people called Targeted Individuals. There is a program from Harvard University called CodeBlue: Wireless Sensors for Medical Care that looks very much like it could easily be adapted for targeting people in their homes.

Notice the parts I’ve marked in red, which show that these are being paid for partly by the U.S. Army. These sensors can send alerts to PDAs, PCs, police and paramedics. Paramedics are now part of the Community Policing Program I researched at http://www.skizit.biz/2014/03/08/construction-of-a-police-state-in-america/.

Please take the time to review this material, because it shows how the police, firemen and paramedics are part of Community Policing, along with corporate partners and “cohorts”, which I believe is what they are calling gangstalkers. If you are being gangstalked, you may have noticed that quite a few of them look like veterans. That’s because they have called in veterans to serve as watchers, or cohorts.

CodeBlue: Wireless Sensors for Medical Care

http://fiji.eecs.harvard.edu/CodeBlue

We are exploring applications of wireless sensor network technology to a range of medical applications, including pre-hospital and in-hospital emergency care, disaster response, and stroke patient rehabilitation (see the related Mercury project as well).


Recent advances in embedded computing systems have led to the emergence of wireless sensor networks, consisting of small, battery-powered "motes" with limited computation and radio communication capabilities. Sensor networks permit data gathering and computation to be deeply embedded in the physical environment. This technology has the potential to impact the delivery and study of resuscitative care by allowing vital signs to be automatically collected and fully integrated into the patient care record and used for real-time triage, correlation with hospital records, and long-term observation.

This project is supported by grants from the National Science Foundation, National Institutes of Health, U.S. Army, as well as generous gifts from Sun Microsystems, Microsoft Corporation, Intel Corporation, Siemens AG, and ArsLogica.

Wireless Vital Sign Sensors

We have developed a range of wireless medical sensors based on the popular TinyOS "mote" hardware platforms. A wireless pulse oximeter and wireless two-lead EKG were among the first two sensors developed by our lab. These devices collect heart rate (HR), oxygen saturation (SpO2), and EKG data and relay it over a short-range (100 m) wireless network to any number of receiving devices, including PDAs, laptops, or ambulance-based terminals. The data can be displayed in real time and integrated into the developing pre-hospital patient care record. The sensor devices themselves can be programmed to process the vital sign data, for example, to raise an alert condition when vital signs fall outside of normal parameters. Any adverse change in patient status can then be signaled to a nearby EMT or paramedic. These vital sign sensors consist of a low-power microcontroller (Atmel Atmega128L or TI MSP430) and low-power digital spread-spectrum radio (Chipcon CC2420, compliant with IEEE 802.15.4, 2.4 GHz, approximate range 100 meters, data rate about 80 Kbps). The devices have a small amount of memory (4-10 KB) and can be programmed (using the TinyOS operating system) to sample, transmit, filter, or process vital sign data. These devices are powered by 2 AA batteries with a lifetime of up to several months if programmed appropriately. The basic hardware is based on the MicaZ and Telos sensor nodes, and a custom sensor board integrating the pulse oximeter or EKG circuitry is attached to the mote devices.
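The alert behavior described above (flagging vital signs that fall outside normal parameters) can be sketched in a few lines. This is a Python illustration, not the TinyOS code CodeBlue actually runs. The threshold values, the `Reading` type and the `check_reading` function are all hypothetical, chosen only to show the logic.

```python
from dataclasses import dataclass

# Illustrative ranges assumed for this sketch; they are not CodeBlue's values.
NORMAL_RANGES = {
    "heart_rate": (50, 120),  # beats per minute
    "spo2": (92, 100),        # percent oxygen saturation
}

@dataclass
class Reading:
    patient_id: str
    heart_rate: int
    spo2: int

def check_reading(reading, ranges=NORMAL_RANGES):
    """Return one alert message for each value outside its normal range."""
    alerts = []
    for field, (low, high) in ranges.items():
        value = getattr(reading, field)
        if not low <= value <= high:
            alerts.append(
                f"{reading.patient_id}: {field}={value} outside [{low}, {high}]")
    return alerts

print(check_reading(Reading("p1", 72, 98)))   # []
print(check_reading(Reading("p2", 135, 89)))  # two alerts, heart_rate then spo2
```

If the check runs on the sensor itself, only alert messages need to be sent over the radio instead of every raw sample. That saves bandwidth and battery.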

Wireless pulse oximeter sensor.

Wireless two-lead EKG.

Accelerometer, gyroscope, and electromyogram (EMG) sensor for stroke patient monitoring.

CodeBlue is also being used by the AID-N project at Johns Hopkins Applied Physics Laboratory, which is investigating a range of technologies for disaster response. The AID-N wireless sensors (which run the CodeBlue software) include an electronic "triage tag" with pulse oximeter, LCD display, and LEDs indicating patient status; a packaged version of our two-lead EKG mote; and a wireless blood pressure cuff. The eTag sensor hardware was developed by Leo Selavo at University of Virginia.

UVa/AID-N "eTag" wireless triage tags, with pulse oximeter, LEDs to indicate patient triage status, and control buttons.

UVa/AID-N wireless two-lead EKG (same as above, but with case).

UVa/AID-N wireless blood pressure cuff.

Mercury: Wearable Sensors for Motion Analysis

In collaboration with the Motion Analysis Laboratory at the Spaulding Rehabilitation Hospital, we are developing the Mercury system, which is designed to support high-resolution motion studies of patients being treated for neuromotor conditions such as Parkinson's Disease, stroke, and epilepsy. See the Mercury project page for more details.

Our sensor hardware designs are available under an "open source" license to research groups that are interested in experimenting with these devices. We are actively pursuing research collaborations with other medical groups, disaster response teams, and companies interested in this technology. Please contact us for more information.

CodeBlue software platform

CodeBlue architecture for emergency response.

In addition to the hardware platform, we are developing a scalable software infrastructure for wireless medical devices, called CodeBlue. CodeBlue is designed to provide routing, naming, discovery, and security for wireless medical sensors, PDAs, PCs, and other devices that may be used to monitor and treat patients in a range of medical settings. CodeBlue is designed to scale across a wide range of network densities, ranging from sparse clinic and hospital deployments to very dense, ad hoc deployments at a mass casualty site. CodeBlue must also operate on a range of wireless devices, from resource-constrained motes to more powerful PDA and PC-class systems. For more information, please see the IEEE Pervasive Computing article about CodeBlue or this technical report with more details.

Part of the CodeBlue system includes MoteTrack, a system for tracking the location of individual patient devices indoors and outdoors, using radio signal information. In MoteTrack, a hospital, clinic, or other area is outfitted with a set of fixed radio beacon nodes that are used to calculate the 3D position of the wireless sensors, which may be attached to patients, carried by physicians or nurses, or attached as "location tags" to medical equipment. MoteTrack has been demonstrated in a building-wide deployment at Harvard and yields an 80th percentile error of about 2 meters, which is more than adequate for many location-tracking applications.
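The paragraph above does not explain how radio signal information becomes a position. One common approach to this kind of indoor tracking is RF fingerprinting. Reference "signatures" of beacon signal strengths are recorded at known positions. A device's current signature is then compared against them in signal space. The sketch below assumes that approach; it is not necessarily MoteTrack's exact algorithm. The beacon names, positions, dBm values and the `-100` dBm fill value are all invented for illustration.

```python
import math

# Hypothetical reference signatures: a known (x, y, z) position in meters
# mapped to the signal strength (dBm) heard from each fixed beacon there.
REFERENCE = [
    ((0.0, 0.0, 0.0), {"b1": -40, "b2": -70, "b3": -75}),
    ((5.0, 0.0, 0.0), {"b1": -60, "b2": -45, "b3": -72}),
    ((0.0, 5.0, 0.0), {"b1": -62, "b2": -74, "b3": -44}),
    ((5.0, 5.0, 3.0), {"b1": -75, "b2": -58, "b3": -55}),
]

MISSING_DBM = -100  # assumed reading for a beacon that was not heard

def signal_distance(sig_a, sig_b):
    """Euclidean distance in signal space over the union of beacons."""
    beacons = set(sig_a) | set(sig_b)
    return math.sqrt(sum(
        (sig_a.get(b, MISSING_DBM) - sig_b.get(b, MISSING_DBM)) ** 2
        for b in beacons))

def estimate_position(observed, reference=REFERENCE, k=2):
    """Average the positions of the k signatures closest in signal space."""
    nearest = sorted(reference,
                     key=lambda ref: signal_distance(observed, ref[1]))[:k]
    count = len(nearest)
    return tuple(sum(pos[i] for pos, _ in nearest) / count for i in range(3))

# Closest signatures are the ones at (0, 0, 0) and (5, 0, 0) -> (2.5, 0.0, 0.0)
print(estimate_position({"b1": -50, "b2": -58, "b3": -74}))
```

Averaging several nearby signatures, instead of taking only the single best match, smooths out noisy readings. The error figure quoted above, about 2 meters at the 80th percentile, depends heavily on how densely reference signatures are collected.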

The CodeBlue system is currently under development and we anticipate a source code release soon. The MoteTrack system is currently available for download at the link above.

Our research focuses on the following areas:

Integration of medical sensors with low-power wireless networks

Wireless ad-hoc routing protocols for critical care; security, robustness, prioritization

Hardware architectures for ultra-low-power sensing, computation, and communication

Interoperation with hospital information systems; privacy and reliability issues

3D location tracking using radio signal information

Adaptive resource management, congestion control, and bandwidth allocation in wireless networks

We are also investigating wide-area event delivery infrastructures for medical care, as part of the Harvard Hourglass project. Such a system will allow seamless access to patient care data by EMTs, emergency department personnel, and other physicians through a variety of interfaces, including handheld PDA and Web-based clients. In collaboration with 10Blade, we are integrating Vital Dust sensors into iRevive, a PDA-based patient care record database. The combined system will allow real-time vital sign capture and triage, automatically inserting time-stamped vital sign data in the patient care record (PCR) prepared by EMTs. This will lead to more accurate reporting and a significant reduction of paperwork for EMSs. Our PDA-based triage application displays vital signs for multiple patients and immediately alerts the EMT to a change in patient status.

Software and hardware release

You can download a prototype release of the CodeBlue software and hardware description files here:

http://www.eecs.harvard.edu/~mdw/proj/codeblue/release

We have also created a public mailing list for users of the CodeBlue software. Use this list for questions, comments, and discussion about the system as well as to receive notification of updates.

To subscribe, visit this page:

https://www.eecs.harvard.edu/mailman/listinfo/codeblue-users

HOURGLASS

An Infrastructure for Connecting Sensor Networks and Applications

The Harvard Hourglass project is building a scalable, robust data collection system to support geographically diverse sensor network applications. Hourglass is an Internet-based infrastructure for connecting a wide range of sensors, services, and applications in a robust fashion. In Hourglass, streams of data elements are routed to one or more applications. These data elements are generated from sensors inside of sensor networks whose internals can be entirely hidden from participants in the Hourglass system. The Hourglass infrastructure consists of an overlay network of well-connected dedicated machines that provides service registration, discovery, and routing of data streams from sensors to client applications. In addition, Hourglass supports a set of in-network services such as filtering, aggregation, compression, and buffering stream data between source and destination. Hourglass also allows third party services to be deployed and used in the network.

Overview

Sensor networks are becoming a pervasive part of our environment, and promise to deliver real-time data streams for applications such as environmental monitoring, structural engineering, and health care. Existing sensor networks are single-purpose in that they communicate with a limited number of external systems through proprietary interfaces.

To address these issues, we are developing a scalable, robust data collection system, called Hourglass. The Hourglass infrastructure is a collection of Internet-connected systems that provides mechanisms for discovery of sensor network data and routes data streams from providers to requesters in a fault tolerant, delay-sensitive fashion. Sensor networks and end-user applications interface with Hourglass to publish locally-generated data streams or request streams of interest. The systems making up the Hourglass "core" are well-connected, well-provisioned servers maintained by organizations providing the Hourglass service.
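The publish/request pattern described above can be illustrated in a single process. Sensor networks advertise streams, applications discover and request them, and the core routes each data element to every requester. This is a hypothetical toy, not Hourglass's real interface. The `StreamBroker` class, its method names and the stream names are invented for illustration, and a real overlay would distribute this state across many servers.

```python
from collections import defaultdict

class StreamBroker:
    """Toy stand-in for an overlay that matches stream providers to requesters."""

    def __init__(self):
        self.providers = {}                   # stream name -> description
        self.subscribers = defaultdict(list)  # stream name -> callbacks

    def publish_stream(self, name, description):
        """A sensor network advertises a locally generated stream."""
        self.providers[name] = description

    def discover(self, keyword):
        """Names of advertised streams whose description mentions keyword."""
        return sorted(n for n, d in self.providers.items() if keyword in d)

    def request_stream(self, name, callback):
        """An application asks to receive every element of a stream."""
        self.subscribers[name].append(callback)

    def deliver(self, name, element):
        """Route one data element from its provider to all requesters."""
        if name not in self.providers:
            raise KeyError(f"unknown stream: {name}")
        for callback in self.subscribers[name]:
            callback(element)

broker = StreamBroker()
broker.publish_stream("ward3/spo2", "pulse oximeter readings")
received = []
broker.request_stream("ward3/spo2", received.append)
broker.deliver("ward3/spo2", 97)    # received is now [97]
```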

Apart from providing a robust stream dissemination infrastructure, Hourglass supports a range of in-network services to facilitate efficient discovery, processing, and delivery of sensor network data. These services include filtering, compression, aggregation, and storage of event streams within the Hourglass. Hourglass dynamically adapts to changing network conditions and node failures by allocating in-network services to nodes to meet performance and reliability targets. For example, to reduce bandwidth requirements, a filtering service can be instantiated near an event source to filter out non-critical data.
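The in-network services mentioned above can be pictured as stages chained between a source and its destination. Below is a minimal sketch, assuming streams are plain Python iterables. The function names and the sample values are hypothetical and do not come from Hourglass. A filter placed near the source forwards only "critical" elements. An aggregator replaces each window of elements with its mean.

```python
from typing import Callable, Iterable, Iterator

def filter_service(stream: Iterable[float],
                   is_critical: Callable[[float], bool]) -> Iterator[float]:
    """Forward only the elements the predicate marks as critical."""
    for element in stream:
        if is_critical(element):
            yield element

def aggregate_service(stream: Iterable[float], window: int) -> Iterator[float]:
    """Emit the mean of each full window; a trailing partial window is dropped."""
    batch = []
    for element in stream:
        batch.append(element)
        if len(batch) == window:
            yield sum(batch) / window
            batch = []

readings = [10, 20, 60, 10, 70, 10]   # hypothetical raw sensor values
critical = list(filter_service(readings, lambda v: v > 50))  # [60, 70]
summary = list(aggregate_service(readings, 3))               # [30.0, 30.0]
```

In this example the filter sends two of six elements across the wide-area network instead of all six. This is the bandwidth saving the paragraph attributes to placing a filter near the event source.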

The Hourglass architecture leverages recent research in overlay networks and peer-to-peer architectures for constructing self-organizing, robust services from a collection of hosts distributed across the Internet. Our approach differs from previous work in that it focuses on real-time event delivery for sensor network applications, the incorporation of mobile hosts and intermittent connectivity to clients, and dynamic in-network processing.

To facilitate the implementation of large-scale stream-based applications, such as Hourglass, we propose a novel underlying network infrastructure called a Stream-Based Overlay Network (SBON). SBONs are intended to support a new class of Internet-based applications that pull data from one or more streaming sources on the Internet and process the data in the network as it is delivered to potentially multiple end-user applications. By abstracting away the details of data path optimization, service naming and service discovery, SBONs will greatly simplify the development of Internet-based stream processing systems.

OTHER INTERESTING STUFF (Incomplete):

http://www.slideshare.net/Vetruve/wireless-sensors-and-control-networks

http://scholar.lib.vt.edu/ejournals/JOTS/v39/v39n2/pan.html

The current common sensing methods, such as infrared light emitters and sensors, radio frequency identification, Bluetooth, Zigbee, WiFi, GPS, and depth sensors (such as Microsoft Kinect), all have various configurations and techniques involving detection of proximity (Kumaragurubaran, 2011). Of these sensing methods, however, the infrared (IR) sensor and depth sensor (Kinect) are the only two applied in body sensing without a hand-held device (Hill, 2012). Previous studies (Hu, Jiang, & Zhang, 2008; Ma, 2012; Yamtraipat, Khedari, Hirunlabh, & Kunchornrat, 2006) have shown the effects of using IR sensors for saving electricity. However, IR sensors are often interfered with by passing dogs, cats, or other animals, causing abnormal activation of the devices (Pan, Lin, & Wu, 2011). In contrast, Kinect can distinguish human presence and has a wider sensor range area than IR. Users also can reconfigure them according to individual needs to develop detection contexts for electronic devices required in different venues (Pan, Tu, & Chien, 2012).

Kinect is a human-body sensing input device by Microsoft for the Xbox 360 video game console and Windows, which enables users to interact with the Xbox 360 without the need for a hand-held controller; it is also a 3D depth sensor that integrates three lenses (Pan, Chien, & Tu, 2012). The IP Power system, launched by the AVIOSYS Corporation, can control the power source switches using the Internet and has four power ports, which can independently manage power sources for four electrical devices (Aviosys International Inc., 2011). In this study, we used the source code drivers released by PrimeSense to write a program that controlled the power source switch of the IP power; the operation would enable the electrical device to actively switch its power on or off based on body-sensing, and thereby achieve energy savings by switching off the electrical device when no one was around to use it.
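The study's control idea is to switch a device off when no one is around. It can be reduced to a small state machine. The PrimeSense drivers and the AVIOSYS IP Power interface are not described here, so this sketch abstracts them away as a boolean "person detected" sample and a boolean "port powered" result. The `PresencePowerController` class and the `off_delay_s` parameter are hypothetical. The delay is an assumption added so the device is not switched off the instant someone steps out of view.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class PresencePowerController:
    """Decide the state of one power port from periodic presence samples.

    off_delay_s: seconds with no one detected before switching off
    (an assumed parameter; the study does not give a value).
    """
    off_delay_s: float
    power_on: bool = False
    last_seen_s: Optional[float] = None

    def update(self, now_s: float, person_detected: bool) -> bool:
        """Feed one sample; return True if the port should be powered."""
        if person_detected:
            self.last_seen_s = now_s
            self.power_on = True
        elif self.power_on and self.last_seen_s is not None:
            if now_s - self.last_seen_s >= self.off_delay_s:
                self.power_on = False   # nobody seen for long enough
        return self.power_on

controller = PresencePowerController(off_delay_s=30)
controller.update(0, True)    # someone walks in: power on
controller.update(29, False)  # still within the delay: stays on
controller.update(30, False)  # 30 s with no one: power off
```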

[The signal flow and electricity source flow are shown in this flow chart of the KIP System. The electronic marquee, IP power and the laptop all receive an electric source from the EZ-RE Smart Meter, and the laptop receives a signal flow from it as well. The laptop sends a signal flow to the IP power, which sends a signal flow to the electronic marquee. The Kinect sends both an electric source and a signal flow to the computer.]

HOW TO HACK A SMART METER:

http://www.technologyreview.com/hack/414820/meters-for-the-smart-grid/

Large-Scale Demonstration of Self-Organizing Wireless Sensor Networks

http://webs.cs.berkeley.edu/800demo/

On Aug. 27, 2001, researchers from the University of California, Berkeley and the Intel Berkeley Research Lab demonstrated a self-organizing wireless sensor network consisting of over 800 tiny low-power sensor nodes. This demonstration highlights work at Berkeley that is funded, in part, by the Defense Advanced Research Projects Agency (DARPA) Network Embedded Software Technology program and is a leading component of the CITRIS research agenda, as well as of the collaboration with Intel. The demonstration was live, involving most of the audience attending the kickoff keynote of the Intel Developer Forum given by Dr. David Tennenhouse, Intel VP and Director of Research.

A sensor network application

The basic concept of a self-organized sensor network was first demonstrated at a moderate scale on stage by Professor David Culler (University Director of the Intel Berkeley Research Lab) and several students from UCB and UCLA. A 1 x 1.5 inch wireless sensor node was carried on stage and activated by each of several students. As the nodes were turned on, their icons appeared on a display showing the state of the network. An example screenshot appears below. The lines indicate which nodes can 'hear' each other and communicate via their radios. The lines highlighted in green are the links chosen by the network to form an ad hoc, multihop routing structure to transmit sensor data to the display. This structure grew as the nodes were activated and continued to adapt to changing conditions. The background showed the network's 'perception' of light intensity on the stage. Initially, it was white at full illumination. Taking the stage lights down brought it to dark. When selective spots were brought up, the corresponding regions went light. As the students walked away, the network took itself apart.

Live Ad Hoc Sensor Network showing Light Intensity

Tiny Nodes

A large-scale demonstration of the networking capability used tiny nodes, just the size of a quarter, that were hidden under 800 chairs in the lower section of the presentation hall. The core is a 4 MHz low-power microcontroller (ATMEGA 163) providing 16 KB of flash instruction memory, 512 bytes of SRAM, ADCs, and primitive peripheral interfaces. A 256 KB EEPROM serves as secondary storage. Sensors, actuators, and a radio network serve as the I/O subsystem. The network uses a low-power radio (RF Monolithics T1000) operating at 10 kbps. The node has four senses: light, temperature, battery level, and radio signal strength. It can actuate two LEDs, control the signal strength of the radio, and transmit and receive signals. By adjusting the signal strength, the radio cell size can be varied from a couple of feet to tens of meters, depending on the physical environment. A second microcontroller is provided to allow the core microcontroller to be reprogrammed over the network. The entire system consumes about 5 mA when active. The radio and the microcontroller consume about as much power as a single LED. In the passive mode, they consume only a few microamps while still checking for radio or sensor stimuli that should cause them to 'wake up'.
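The gap between about 5 mA when active and a few microamps when passive is why duty cycling dominates battery life on nodes like these. The average current is the time-weighted mix of the two: I_avg = d * I_active + (1 - d) * I_sleep, where d is the fraction of time awake. Lifetime is then roughly the battery capacity divided by I_avg. The sketch below uses the 5 mA figure from the text. It also rests on three assumptions not given in the text: a sleep current of 5 µA, a 2500 mAh battery capacity and a 1% duty cycle, chosen only for illustration. It ignores self-discharge and voltage cutoff.

```python
def average_current_ma(active_ma, sleep_ma, duty_cycle):
    """Time-weighted average current for a node awake duty_cycle of the time."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_ma + (1.0 - duty_cycle) * sleep_ma

def lifetime_days(capacity_mah, active_ma, sleep_ma, duty_cycle):
    """Idealized battery lifetime in days (no self-discharge or cutoff losses)."""
    return capacity_mah / average_current_ma(active_ma, sleep_ma, duty_cycle) / 24.0

ACTIVE_MA = 5.0        # from the text: about 5 mA when active
SLEEP_MA = 0.005       # assumed: "a few microamps" taken as 5 uA
CAPACITY_MAH = 2500.0  # assumed battery capacity, not from the text

always_on = lifetime_days(CAPACITY_MAH, ACTIVE_MA, SLEEP_MA, 1.0)
one_percent = lifetime_days(CAPACITY_MAH, ACTIVE_MA, SLEEP_MA, 0.01)
```

Under these assumptions, a node that never sleeps lasts about 21 days. A node awake 1% of the time draws about 0.055 mA on average and lasts roughly 1,900 days, a little over five years, before real-world losses.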

A handful of network sensor 'dots'

TinyOS

The key innovation is a novel operating system, including a network stack, designed especially for resource-constrained environments where data and control have to be moved rapidly between various sensors, actuators, and the network. TinyOS is a component-based, event-driven operating system framework that starts at a few hundred bytes for the scheduler and grows to complete network applications in a few kilobytes. The demonstration application consisted of nine software components. At the lowest level are abstraction components for the network, the sensors and the LEDs. Building upon this is a very efficient network stack which reaches from modulating the physical link in software up to an "Active Message" application programming interface. One application component provides multihop routing, where nodes can communicate to other nodes that are several radio hops away by having intermediate nodes route and retransmit their packets. Other application components sample sensor data and process it in various ways. Still others manage the basic health of the node or network.
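TinyOS itself is written in nesC, so what follows is only a Python analogy of the event-driven structure described above, not TinyOS code. The assumption is a single FIFO queue of short, run-to-completion tasks. Event handlers never block. Instead they post tasks that run later, one at a time. Here a plain function call (`on_sensor_ready`) stands in for a hardware "data ready" event. All names are hypothetical.

```python
from collections import deque

class TaskScheduler:
    """Toy analogue of an event-driven, run-to-completion task queue."""

    def __init__(self):
        self.tasks = deque()

    def post(self, task, *args):
        """Queue a short task; it runs later, after earlier tasks finish."""
        self.tasks.append((task, args))

    def run(self):
        """Run posted tasks in FIFO order until the queue is empty."""
        while self.tasks:
            task, args = self.tasks.popleft()
            task(*args)  # each task runs to completion before the next starts

log = []
sched = TaskScheduler()

def send_packet(value):
    log.append(f"radio: sent {value}")

def process_sample(raw):
    value = raw // 4  # e.g. scale a 10-bit ADC reading down to 8 bits
    log.append(f"processed {raw} -> {value}")
    sched.post(send_packet, value)

def on_sensor_ready(raw):
    # Stand-in for a hardware event: do almost nothing, just post a task.
    sched.post(process_sample, raw)

on_sensor_ready(1000)
on_sensor_ready(512)
sched.run()
# log: processed 1000 -> 250, processed 512 -> 128,
#      radio: sent 250, radio: sent 128
```

Because every task runs to completion, the components need no locks around shared state. That is part of what lets such a system fit in a few kilobytes.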

A Self-Organizing Network

For this demonstration, one of the application components provided network discovery and multihop broadcast. A command packet can be transmitted from any node that needs to become the root of a logical network. In the demonstration, this was the node connected to the laptop on stage. Nodes receiving that packet will selectively retransmit the command, allowing the request to 'ripple' out over many levels. The network supported several such commands, including network discovery where each node records the identity of a 'parent' closer to the root. As this request propagates out, a routing tree is grown that spans the network. Many other actions can be requested of the network, such as illuminating LEDs, accessing data, changing modes, or going to sleep. Waking up the network from the very low power sleep mode is especially subtle, because it must use only a minute amount of energy and yet avoid false-positives, which would awaken the entire network. The entire application in the demo occupied about 8 KB.
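The discovery step described above can be simulated. Each node keeps the first sender it hears as its "parent" and retransmits once, so a routing tree and a level (hop count) for every node grow outward from the root. This is a minimal sketch under an idealizing assumption: copies of the command arrive in breadth-first order. In a real radio network, which copy arrives first depends on timing and collisions. The network layout and node names are hypothetical.

```python
from collections import deque

# Hypothetical layout: "laptop" is the root node connected to the laptop.
NETWORK = {
    "laptop": ["n1", "n2"],
    "n1": ["laptop", "n2", "n3"],
    "n2": ["laptop", "n1", "n4"],
    "n3": ["n1", "n4", "n5"],
    "n4": ["n2", "n3"],
    "n5": ["n3"],
}

def discover(neighbors, root):
    """Flood a discovery command from root, building a parent tree and levels."""
    parent = {root: None}
    level = {root: 0}
    frontier = deque([root])
    while frontier:
        sender = frontier.popleft()
        for node in neighbors[sender]:
            if node not in parent:       # first copy wins; later copies ignored
                parent[node] = sender
                level[node] = level[sender] + 1
                frontier.append(node)    # node retransmits the command once
    return parent, level

def nodes_at_level(level, depth):
    """Nodes a 'light up level N' command would target."""
    return sorted(n for n, d in level.items() if d == depth)

parent, level = discover(NETWORK, "laptop")
depth = max(level.values())              # 3 levels deep in this layout
```

Commands such as "illuminate level 1, then level 2" amount to selecting nodes by their recorded level, for example with `nodes_at_level(level, 1)`.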

The intended plan for the demo was to begin with all the nodes asleep. A wakeup request would be propagated through the network, causing each node to light yellow during the boot phase and then red when ready. A network discovery operation would show yellow briefly as the discovery wavefront propagated across the network and then turn off the red LED. Because the discovery takes only a fraction of a second, we would then issue a sequence of commands to illuminate level 1, then level 2, etc. at five-second intervals. Test runs in a near-empty auditorium discovered a network of 800 nodes that was four levels deep. In the live demonstration, a few folks had found their nodes, taken them apart, and played with them, causing the network to wake up, so everything was awake when the audience pulled them from under the chairs. The discovery went by so quickly no one saw it, and the lights were off. We had to tell the nodes to turn their lights back on and rediscover the network, which turned out to be eight levels deep. Actuating the crowd level by level showed the complex structure of such a large, self-organized network.

Wireless Sensor Network Used for Medical Surveillance
